Owen Hoffman

Human-centered AI & Robotics for Safe Interaction

I build interactive AI + robot systems that behave predictably and safely in real environments.

About Me

Hi, I'm Owen Hoffman, a double major in engineering and computer science with a passion for human-AI interaction, HCI/HRI, and autonomous systems. I am completing my bachelor's degree at Swarthmore College, where I conducted HCI research, presented at international workshops, and submitted a full conference paper to ACM CHI. My work focuses on developing LLM-agent-driven CUIs, and I would love to extend it into the physical world. I have experience with Python, ROS, MATLAB, and embedded systems. I am applying to the MIT Media Lab to create embodied, tangible AI systems that can interact socially with humans while prioritizing safety.

ScamPilot: Simulating Conversations with LLMs to Protect Against Online Scams

Conditionally Accepted at ACM CHI ’26

Role: Led a team of five researchers. I created the system prompting pipeline, the interaction flow for the conversational user interface (CUI), the backend database, the user study design, and the statistical analysis. I also led the team through the paper-writing process and revisions.

Approach

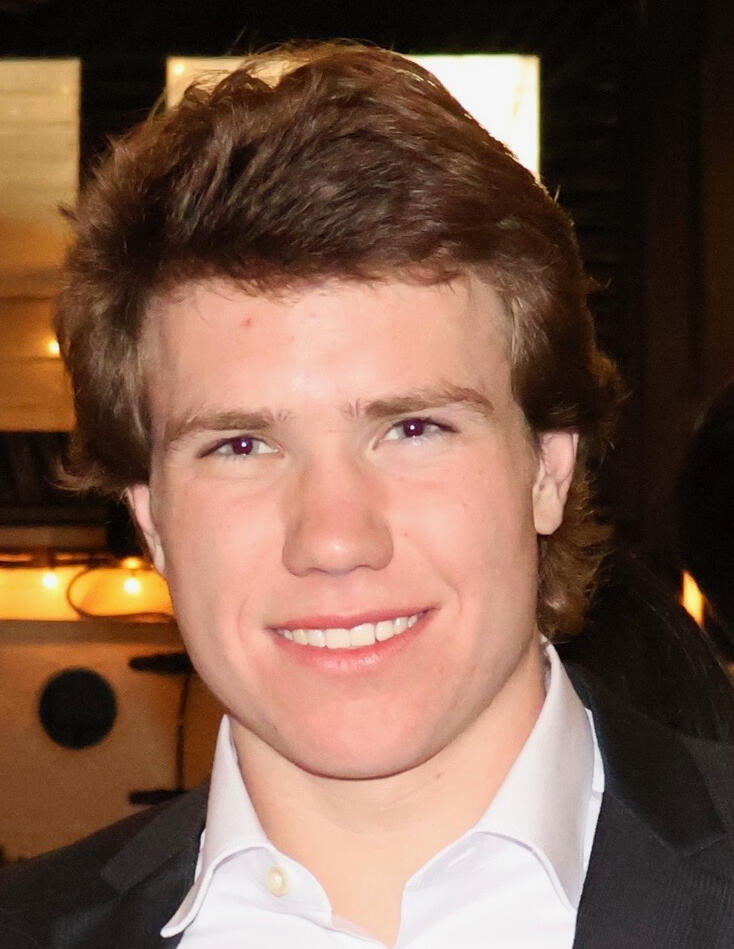

- Created a CUI driven by LLM agents that simulates a scam conversation to inoculate users against scammers' tactics.

- Placed the user in an advisory role so they learn how to advise people targeted by scams while also picking up scammers' tactics

- Users interact with the CUI through embedded quiz questions and the advice they provide to the target

- Provided real-time feedback through a feedback LLM to show users what they did well or how they could improve their advice

Interface Tutorial

Prompting Pipeline for the LLM Agents

Methods

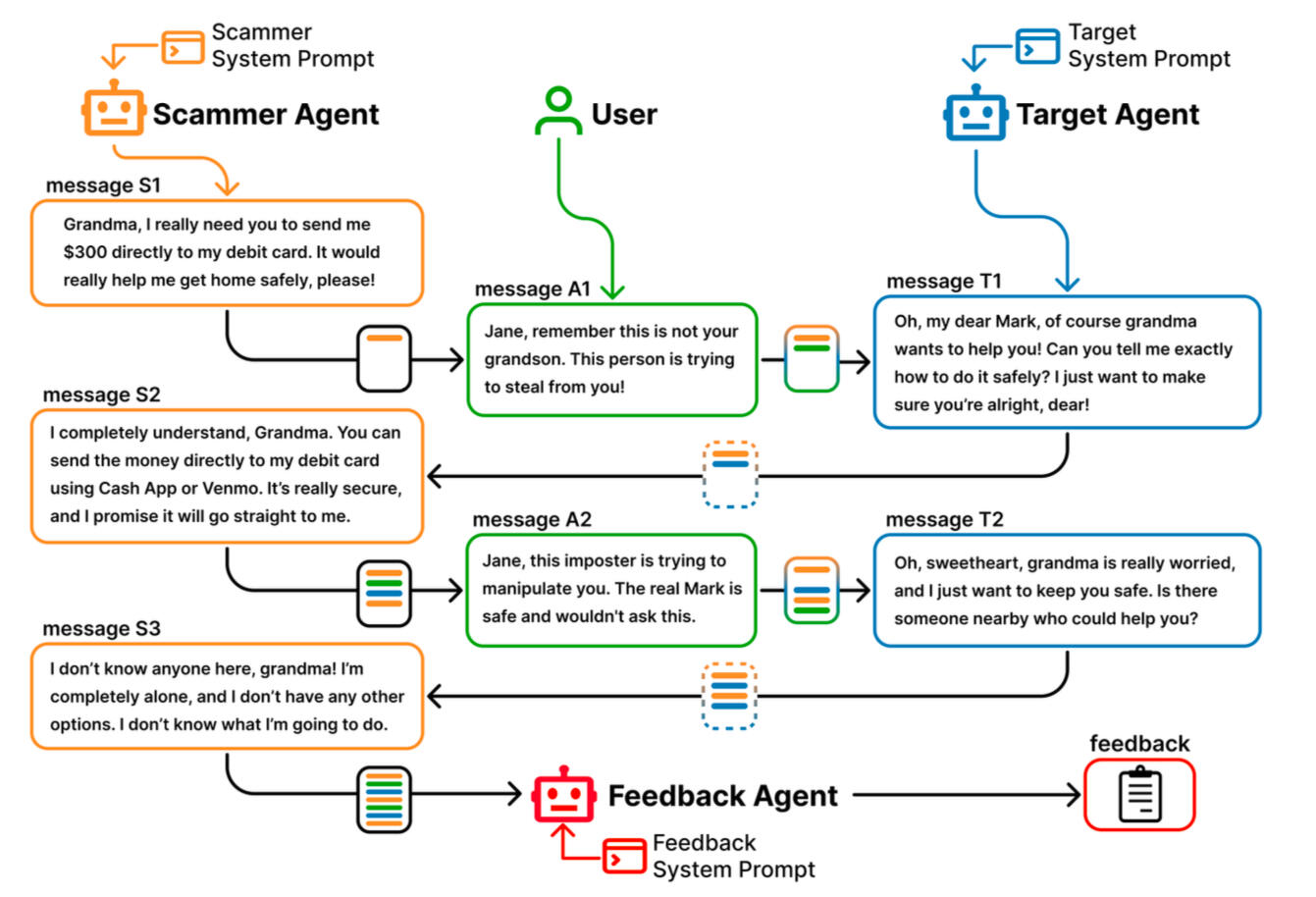

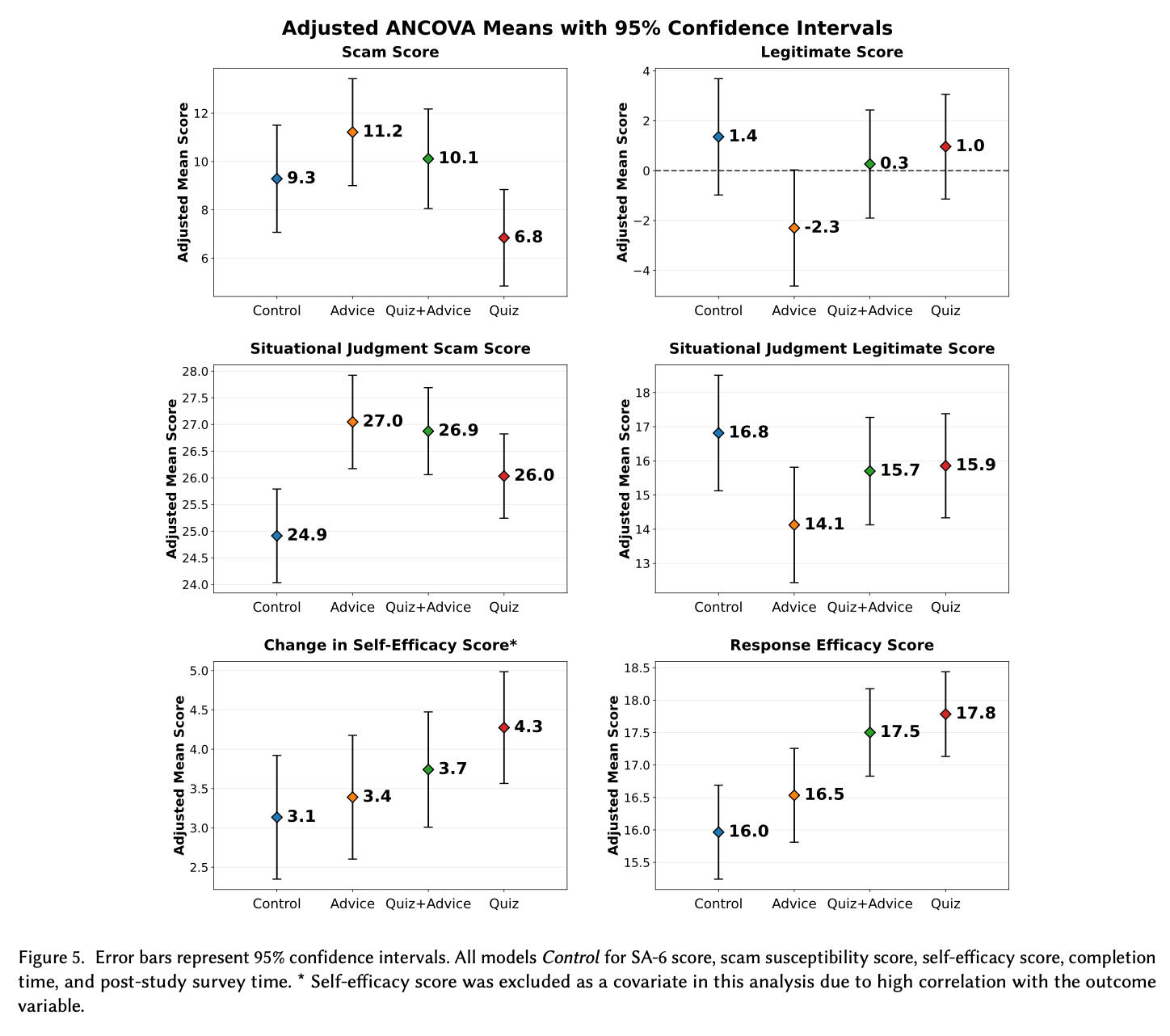

- Evaluated the system using a mixed-methods approach with four groups, one of which was a control group

- Participants were randomly placed into one of the four groups

- Evaluated users' near transfer scam discernment, far transfer scam discernment, response efficacy, and self-efficacy

Results

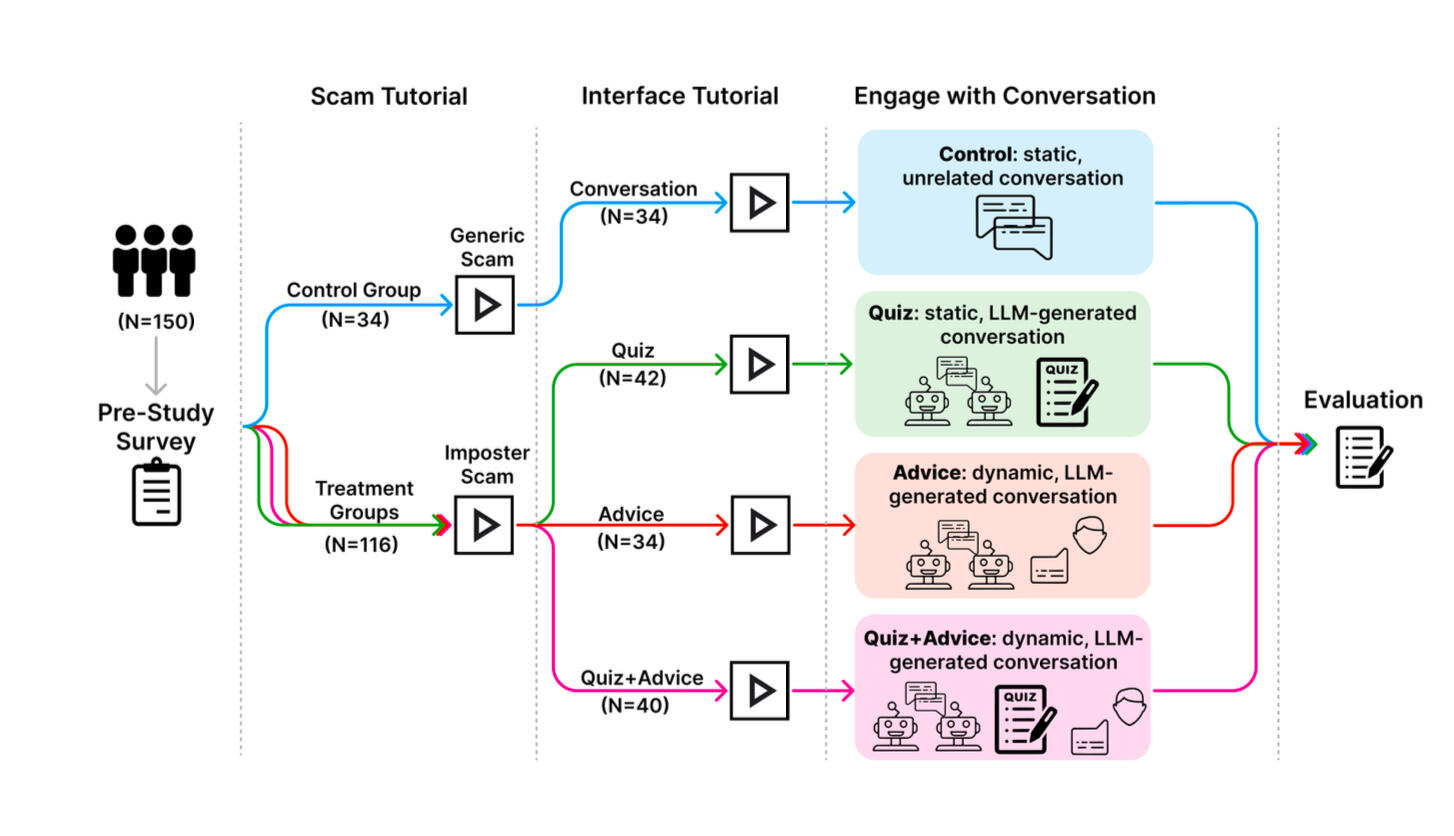

- Found that interface condition was a significant predictor for Situational Judgement Scam score, Response Efficacy, and Scam Score

- Our experimental interface increased scam recognition, users' confidence, and users' confidence in the system, without making people significantly more skeptical of real situations

TurtleBot Autonomy Projects

Role: Coded the majority of the controllers and algorithms for the following TurtleBot projects

Maze Solver using BFS Algorithm

Goal: Make a TurtleBot algorithm which can autonomously navigate a maze

Approach

- Take a maze, compute a shortest path with Dijkstra's algorithm, and convert that path into a sequence of actions, using PD control to decide when to make each turn

- Implemented/used PD control to regulate heading and distance errors, then composed these primitives into longer behaviors for maze traversal.

- Estimated a dominant wall/corridor orientation from range data and used it to compute a continuous heading error for closed-loop alignment
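The planning and control steps above can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the grid encoding (0 = free, 1 = wall), the function names, the action labels, and the PD gains are all assumptions made for the example. On a grid where every move costs the same, breadth-first search returns the same shortest path length as Dijkstra's algorithm, so BFS is used here for brevity.

```python
from collections import deque

# Headings as (row, col) steps, listed clockwise: north, east, south, west.
HEADINGS = [(-1, 0), (0, 1), (1, 0), (0, -1)]


def shortest_path(grid, start, goal):
    """Breadth-first search over free cells (0 = free, 1 = wall)."""
    parents = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start, then reverse.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        for dr, dc in HEADINGS:
            nxt = (cell[0] + dr, cell[1] + dc)
            in_bounds = 0 <= nxt[0] < len(grid) and 0 <= nxt[1] < len(grid[0])
            if in_bounds and grid[nxt[0]][nxt[1]] == 0 and nxt not in parents:
                parents[nxt] = cell
                queue.append(nxt)
    return None  # goal unreachable


def path_to_actions(path, heading=1):
    """Turn a cell path into 'forward' / 'left' / 'right' commands.

    `heading` indexes HEADINGS; the default of 1 means the robot starts facing east.
    """
    actions = []
    for (r0, c0), (r1, c1) in zip(path, path[1:]):
        target = HEADINGS.index((r1 - r0, c1 - c0))
        turn = (target - heading) % 4
        if turn == 1:
            actions.append("right")
        elif turn == 3:
            actions.append("left")
        elif turn == 2:
            actions += ["right", "right"]  # U-turn as two right turns
        heading = target
        actions.append("forward")
    return actions


def pd_angular_velocity(heading_error, prev_error, dt, kp=1.5, kd=0.2):
    """PD law on heading error in radians; the gains are illustrative, not tuned values."""
    return kp * heading_error + kd * (heading_error - prev_error) / dt


maze = [
    [0, 0, 1],
    [1, 0, 1],
    [1, 0, 0],
]
path = shortest_path(maze, (0, 0), (2, 2))
actions = path_to_actions(path)
```

Each `left` or `right` action would then be executed by running the PD law until the heading error falls below a tolerance, which is one way to decide when a turn is finished.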

Results/Takeaways

- Decreased the completion time by 11 seconds through fine-tuning

- Increasing the speed made the robot's motion jerkier, showing the tradeoff between speed and smoothness

TurtleBot Solving Maze A

TurtleBot Solving Maze B

Autonomous TurtleBot Cone-Course Navigation

Goal: Autonomously navigate a cone slalom course with a TurtleBot by detecting cones and tracking a generated path using a pure pursuit controller.

Approach

- Integrated a blob finder algorithm with wheel-encoder odometry to implement a pure-pursuit controller for real-time course navigation

- Fine-tuned the look-ahead distances and linear/angular velocities to create safer, predictable controls

Pipeline

Camera → cone detections → centerline/waypoints → pure pursuit → linear/angular velocity commands → robot trajectory
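The last two stages of this pipeline (pure pursuit to velocity commands) can be sketched as below. This is a simplified version under stated assumptions, not the code that ran on the robot: the function name, the default look-ahead distance and speed, and the waypoint format are hypothetical, and the waypoints are assumed to be ordered along the course with already-passed points removed.

```python
import math


def pure_pursuit_command(pose, waypoints, lookahead=0.4, speed=0.2):
    """Return (linear, angular) velocity steering toward a look-ahead waypoint.

    pose is (x, y, theta) in the world frame; waypoints is a list of (x, y).
    """
    x, y, theta = pose
    # Pick the first waypoint at least `lookahead` away, else the final one.
    target = waypoints[-1]
    for wx, wy in waypoints:
        if math.hypot(wx - x, wy - y) >= lookahead:
            target = (wx, wy)
            break
    dx, dy = target[0] - x, target[1] - y
    # Express the target in the robot frame (x forward, y to the left).
    local_x = math.cos(theta) * dx + math.sin(theta) * dy
    local_y = -math.sin(theta) * dx + math.cos(theta) * dy
    dist_sq = local_x ** 2 + local_y ** 2
    if dist_sq < 1e-9:
        return 0.0, 0.0  # already at the target
    # Curvature of the arc through the robot and the target point.
    curvature = 2.0 * local_y / dist_sq
    return speed, speed * curvature


straight = pure_pursuit_command((0.0, 0.0, 0.0), [(1.0, 0.0)])
left = pure_pursuit_command((0.0, 0.0, 0.0), [(1.0, 1.0)])
right = pure_pursuit_command((0.0, 0.0, 0.0), [(1.0, -1.0)])
```

A shorter look-ahead distance makes the robot track the path more tightly but turn more sharply, which is why the look-ahead and velocity limits are the natural parameters to tune.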

Results/Takeaways

- Created an autonomous TurtleBot algorithm that can navigate a cone course

- Demonstrated the robot in front of an audience, where it was able to adjust to the course being moved around

- Showed how important fine-tuning robotic algorithms is because small changes could lead to drastically different outcomes

TurtleBot Soccer

Goal: Implement an algorithm that lets a TurtleBot detect a ball and a cone goal, line itself up, and hit the ball through the goal

Approach

- Detect the ball and goal to estimate their position in the real world

- Create a "kick pose" behind the ball in the goal frame, then map it to the world frame via rigid transforms

- Implement controllers to reach the kick pose and align for the shot
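The kick-pose step can be illustrated with a 2D rigid transform. The sketch below is an assumption-laden example rather than the project's implementation: it assumes the goal frame has its origin at the goal center, that poses are (x, y, theta) tuples, and that the robot should stand a fixed `standoff` distance behind the ball on the line from the goal through the ball. The function names are hypothetical.

```python
import math


def to_world(frame_pose, local_pose):
    """Map an (x, y, theta) pose from a local frame into the world frame.

    frame_pose is the local frame's own pose expressed in the world frame.
    """
    fx, fy, ftheta = frame_pose
    lx, ly, ltheta = local_pose
    c, s = math.cos(ftheta), math.sin(ftheta)
    # Rotate the local position by the frame heading, then translate.
    return (fx + c * lx - s * ly, fy + s * lx + c * ly, ftheta + ltheta)


def kick_pose_in_goal_frame(ball_xy, standoff=0.3):
    """Pose `standoff` meters behind the ball, facing the goal-frame origin."""
    bx, by = ball_xy
    dist = math.hypot(bx, by)
    ux, uy = bx / dist, by / dist  # unit vector from goal center to ball
    heading = math.atan2(-uy, -ux)  # point back toward the goal center
    return (bx + standoff * ux, by + standoff * uy, heading)


# Goal center at (2, 1) in the world, rotated 90 degrees; ball 1 m in front of it.
goal_in_world = (2.0, 1.0, math.pi / 2)
local_kick = kick_pose_in_goal_frame((1.0, 0.0))
world_kick = to_world(goal_in_world, local_kick)
```

Working in the goal frame keeps the geometry simple (the shot direction always points at the origin), and a single transform then gives the pose the controllers need in world coordinates.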

Two Examples of the Working Algorithm

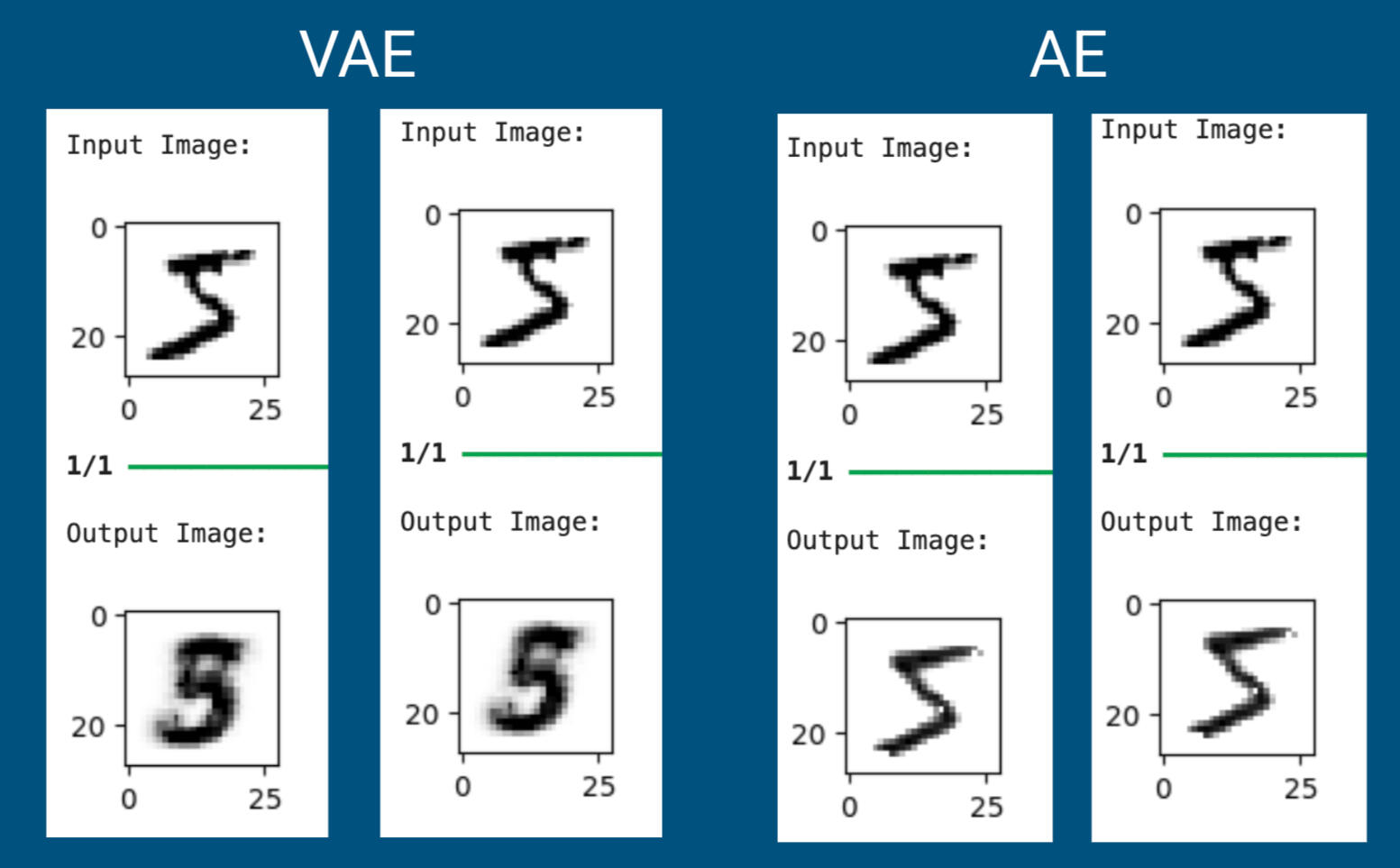

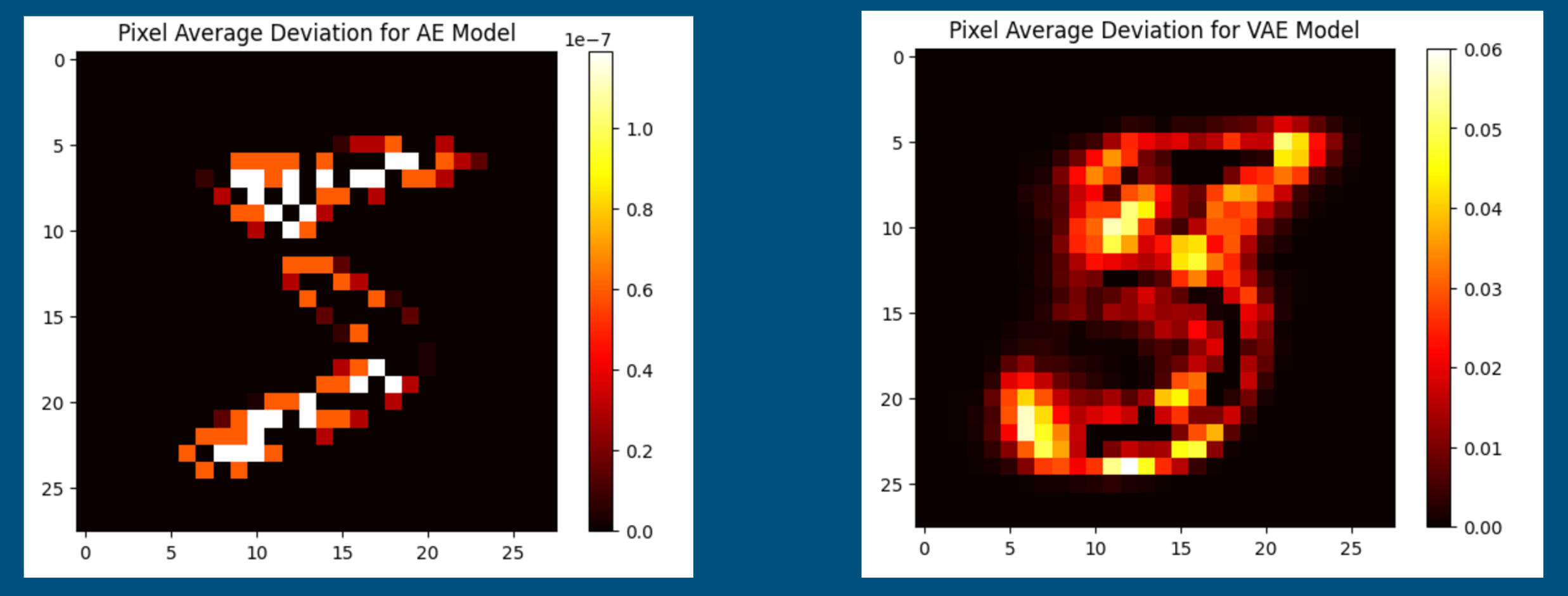

Basic Autoencoder Vs. Variational Autoencoder

Project: With two other students, built an autoencoder and a variational autoencoder and compared them on the MNIST dataset

Overview

- Using TensorFlow's Keras, created an autoencoder and a variational autoencoder

- Showed how basic autoencoders have deterministic outputs while variational autoencoders have probabilistic outputs

- Showed how autoencoders can be used for anomaly detection based on high reconstruction loss

- Showed how the variational autoencoder's output is non-deterministic
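Two of the ideas above can be shown without a deep learning framework. The sketch below is a plain-Python illustration, not the Keras models from the project: `sample_latent` shows the standard reparameterization step that makes a variational autoencoder's output vary between runs, and `is_anomaly` shows thresholding on reconstruction error. The function names, the example vectors, and the threshold value are assumptions chosen for the example.

```python
import math
import random


def sample_latent(mean, log_var, rng):
    """Reparameterization: z = mean + sigma * eps, with eps drawn from N(0, 1)."""
    return [
        m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
        for m, lv in zip(mean, log_var)
    ]


def reconstruction_error(original, reconstructed):
    """Mean squared error between an input and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(original, reconstructed)) / len(original)


def is_anomaly(original, reconstructed, threshold):
    """Flag inputs whose reconstruction error exceeds a chosen threshold."""
    return reconstruction_error(original, reconstructed) > threshold


rng = random.Random(0)
# Same encoder output, two different latent samples.
z_first = sample_latent([0.0, 1.0], [0.0, 0.0], rng)
z_second = sample_latent([0.0, 1.0], [0.0, 0.0], rng)
# With a very small variance the sample collapses onto the mean.
z_tight = sample_latent([0.0, 1.0], [-100.0, -100.0], rng)
```

A basic autoencoder has no sampling step, so the same input always maps to the same code and the same reconstruction. In practice the anomaly threshold would be picked from the distribution of reconstruction errors on normal data.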

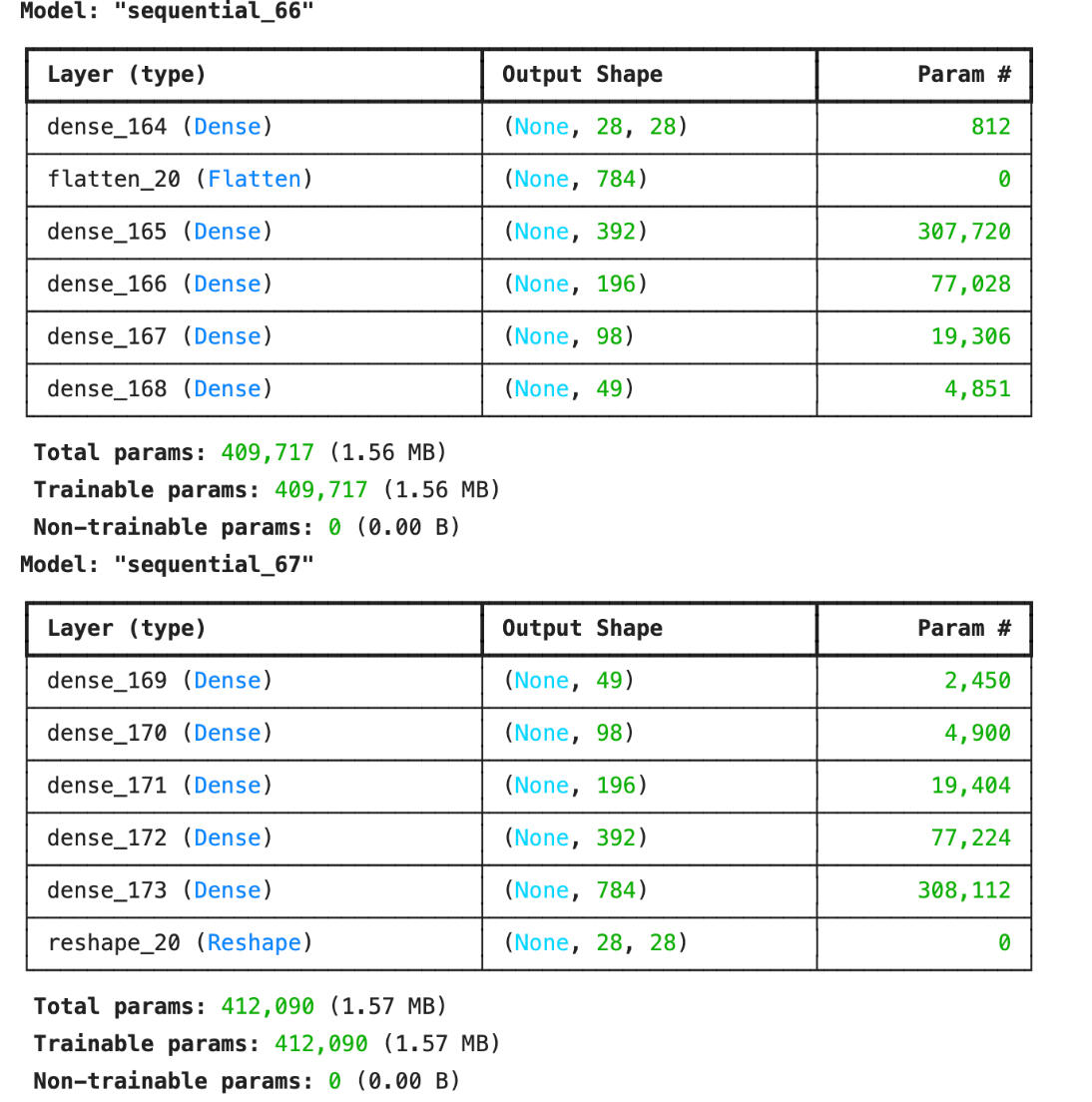

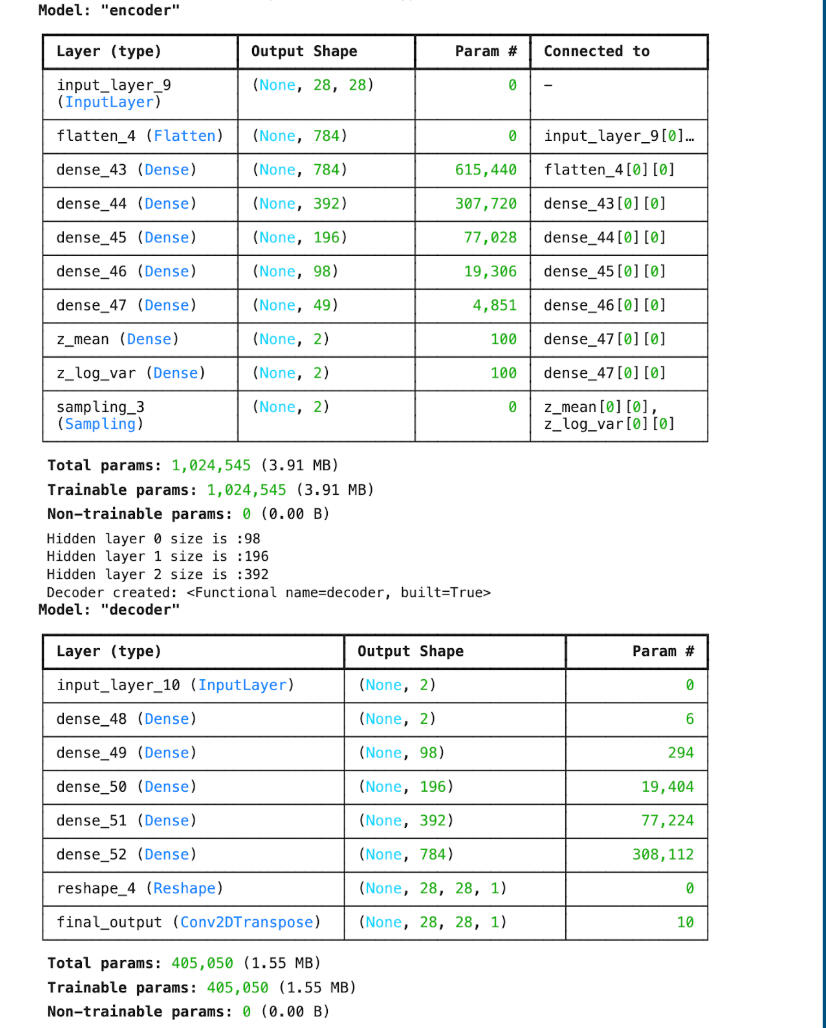

Model Architecture

Basic Autoencoder

Variational Autoencoder

Yes/No Speech Recognition System

Project: I created a "Yes"/"No" speech detection system using MATLAB

Overview

- Trained classifiers with MATLAB's classificationLearner tool on key spectral and temporal features: MFCCs, zero-crossing rate, RMS energy, spectral flux, and spectral centroid

- Held out 20% of the data as a test set to check for overfitting

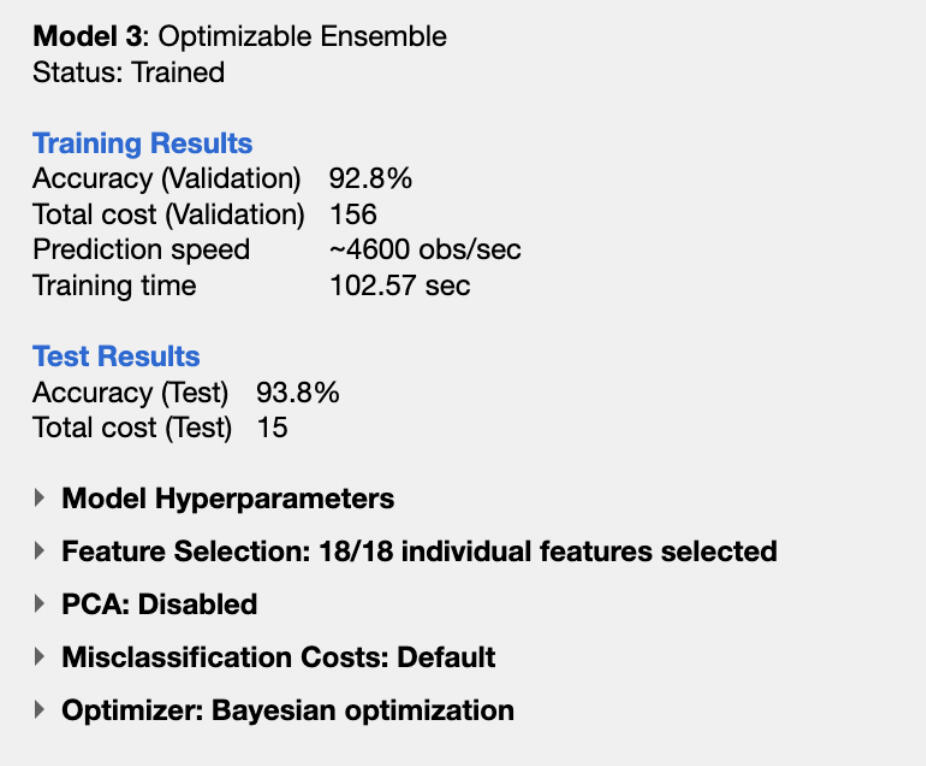

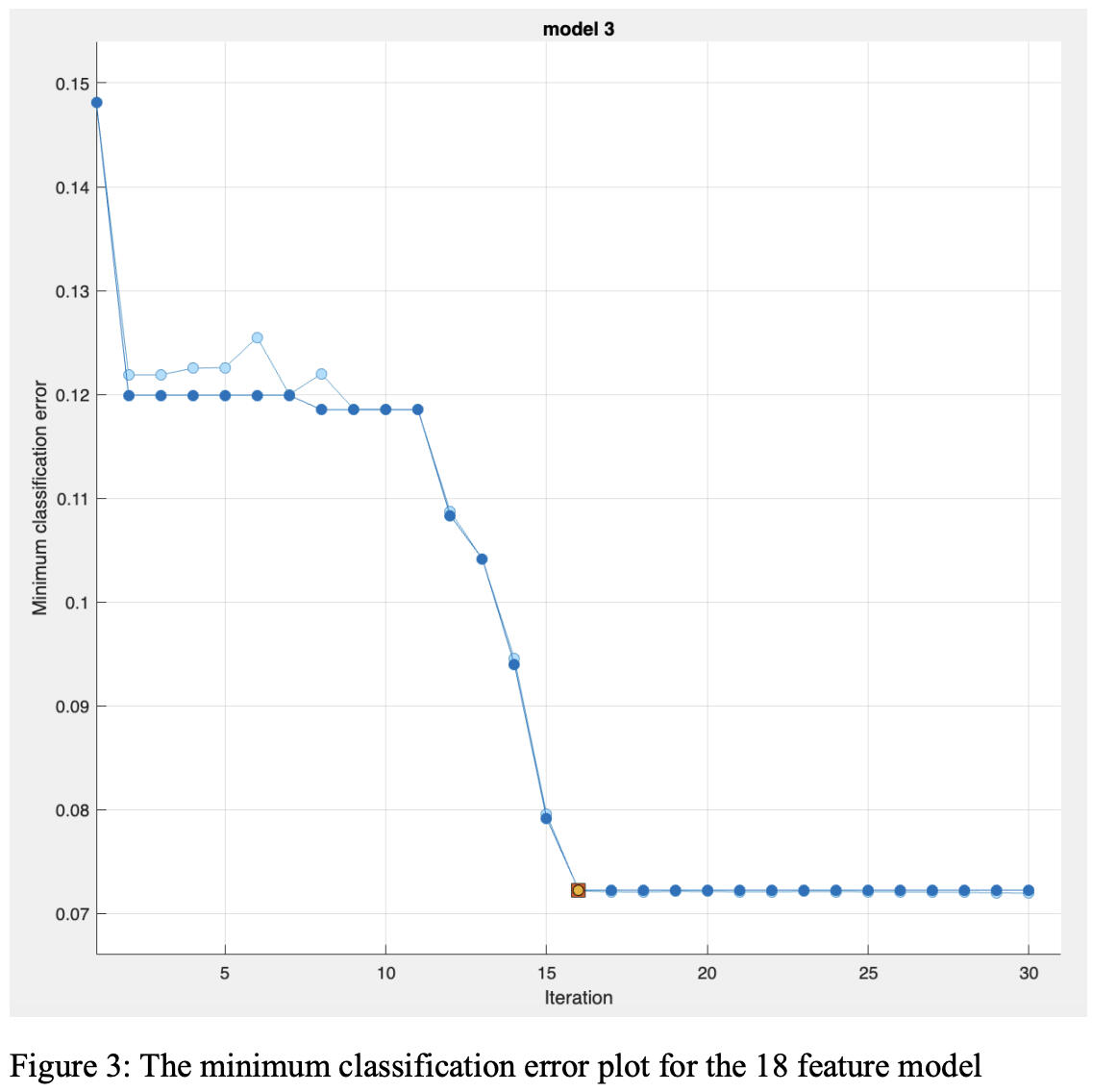

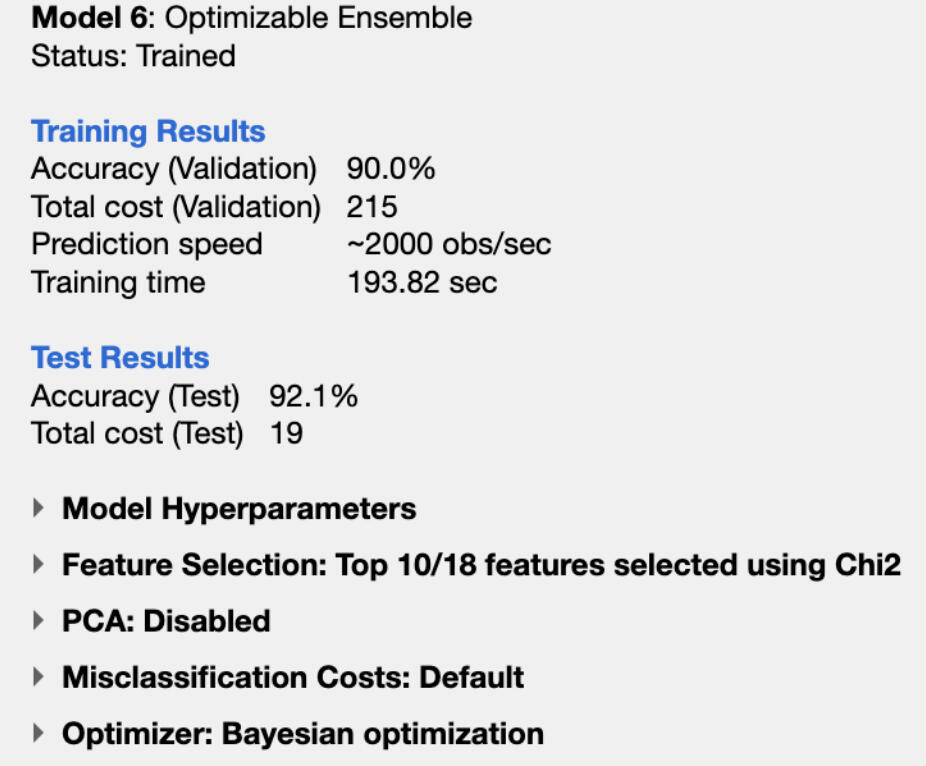

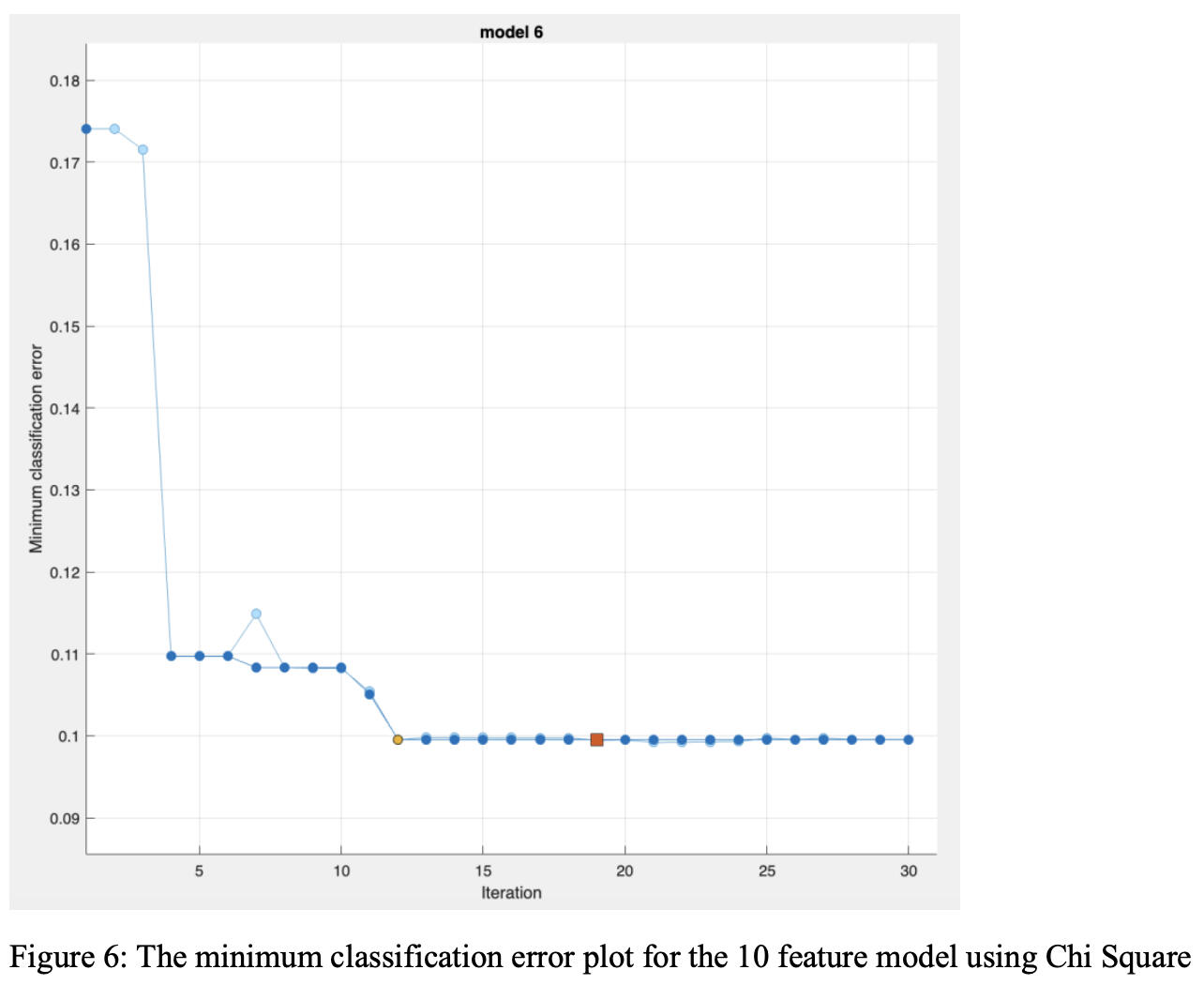

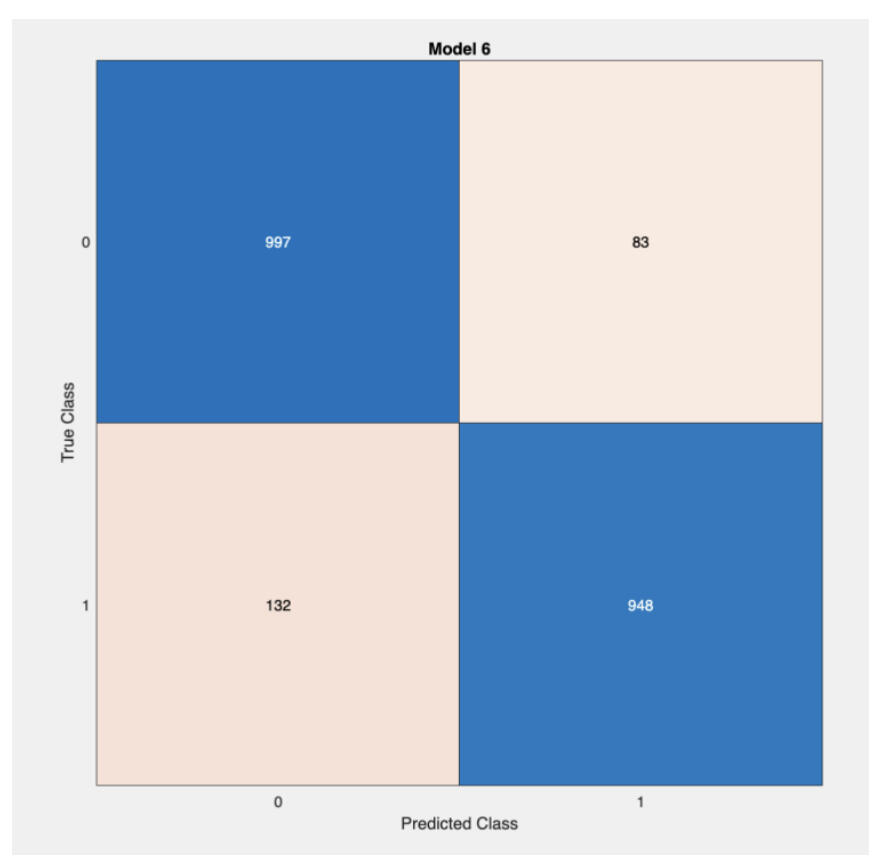

- The highest-accuracy model was an optimizable ensemble, which reached 93.8% test-set accuracy with all features and 92.1% test-set accuracy with the 10 best features

- Shows that selecting the best features can yield a smaller model that is almost as accurate
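For readers unfamiliar with the features listed in the overview, three of them (zero-crossing rate, RMS energy, and spectral centroid) can be computed as in the sketch below. The project itself used MATLAB; this Python version only illustrates the textbook definitions, which may differ in detail from MATLAB's implementations. The function names and test signals are assumptions, and the naive DFT is only practical for short frames.

```python
import math


def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs whose signs differ."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(frame) - 1)


def rms_energy(frame):
    """Root-mean-square amplitude of the frame."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))


def spectral_centroid(frame, sample_rate):
    """Magnitude-weighted mean frequency in Hz, using a naive DFT."""
    n = len(frame)
    weighted, total = 0.0, 0.0
    for k in range(n // 2 + 1):
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(frame))
        im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(frame))
        mag = math.hypot(re, im)
        weighted += mag * k * sample_rate / n
        total += mag
    return weighted / total if total else 0.0


# A 1 kHz sine sampled at 8 kHz: 4 full cycles in a 32-sample frame.
tone = [math.sin(2 * math.pi * 4 * i / 32) for i in range(32)]
centroid_hz = spectral_centroid(tone, 8000)
alternating = [1.0, -1.0, 1.0, -1.0]
```

Features like these are computed per audio frame and then summarized (for example by mean and variance) before being passed to a classifier.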

18 Feature Model

10 Feature Model

Confusion Matrix for 18 Feature Model

Curriculum Vitae